Sometimes Less Is More: The Rise of Small Language Models

Introduction

Imagine running a language model on your laptop. No API calls. No subscription fees. No worrying about your data leaving your servers. Just pure, unrestricted AI inference running locally.

Accessing powerful language models has long meant paying for cloud APIs, absorbing recurring bills, and sending your data to external servers. But an alternative is possible. Small Language Models (SLMs) enable organizations to run capable AI systems entirely on their own infrastructure.

For certain use cases, local SLMs are necessary: they’re private, fast, and don’t rely on cloud providers. They run offline. They’re cheap at scale. Whether you’re a law firm processing confidential documents, a hospital analyzing medical records, or a robot navigating a factory floor, SLMs enable capabilities that were previously impossible without either massive compute budgets or accepting privacy trade-offs.

In this post, we’ll explore what SLMs are, the different approaches to building them, why they matter, and which models are leading this revolution.

What Are SLMs?

Size and Performance

SLMs are defined by parameter count. More parameters generally mean more capability, but also more memory, computing power, and energy required to run the model. There is no clear consensus on the cutoff, but SLMs typically range from 1 billion to 15 billion parameters. Examples include Microsoft’s Phi-3-mini (3.8B), Meta’s Llama 3 (8B), and Mistral 7B. This smaller size means they can run on consumer hardware like laptops, rather than requiring data center infrastructure.

What makes modern SLMs interesting isn’t that they are “small,” but that they are efficient. Phi-3-mini delivers performance approaching GPT-3.5 (estimated at 175 billion parameters) despite being 46x smaller [3]. Mistral 7B outperforms older 13-billion-parameter models across all benchmarks [4].
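To make the link between parameter count and hardware concrete, here is a rough back-of-the-envelope sketch. It assumes memory is dominated by the weights and uses the usual bytes-per-parameter figures for common numeric precisions. The function name `weight_memory_gb` is illustrative, and real usage is higher once activations and the attention cache are included.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights only; activations, KV cache, and runtime overhead are ignored.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, precision: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts as quoted in this post (the GPT-3.5 figure is an estimate).
MODELS = {"Phi-3-mini": 3.8e9, "Mistral 7B": 7e9, "GPT-3.5 (estimated)": 175e9}

if __name__ == "__main__":
    for name, n_params in MODELS.items():
        fp16 = weight_memory_gb(n_params, "fp16")
        int4 = weight_memory_gb(n_params, "int4")
        print(f"{name}: ~{fp16:.1f} GB at fp16, ~{int4:.1f} GB at int4")
```

Under these assumptions, Phi-3-mini needs about 7.6 GB at 16-bit precision and just under 2 GB at 4-bit, which is why it fits on a laptop, while a 175B model needs hundreds of gigabytes.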

How We Got Here

Phase 1: The Foundation (2019-2020) GPT-2 (1.5B parameters) demonstrated that language models could generate coherent text. It was impressive, but limited. The path forward seemed clear: scale up.

Phase 2: Bigger is Better (2020-2022) GPT-3 (175B parameters) proved the scaling hypothesis [7]. More parameters plus more data equaled better performance. The industry followed: models grew to 70 billion, 175 billion, even a trillion parameters. But alongside this race, researchers began exploring compression: distilling large models into smaller “student” models. These early SLMs, like DistilBERT [6], worked for basic tasks but could never surpass their teachers.

Phase 3: Parallel Paths (2023-Present) Large models continued growing (GPT-4, Claude, Gemini) but a parallel research direction emerged focused on efficiency. Instead of just compressing existing models, researchers discovered multiple ways to build capable small models from scratch: synthetic pedagogical data, architectural innovations, massive-scale data curation, and hybrid training methods. The key insight: efficiency comes from multiple paths, not a single solution.

Key Milestones

From mid-2023 through 2025, a series of releases demonstrated that different paths to efficiency could all work. Each model proved a distinct approach:

- June 2023: Microsoft releases Phi-1 (1.3B parameters), demonstrating that curriculum-based training (teaching concepts progressively like a textbook) beats scale [1]

- September 2023: Mistral 7B (7B parameters) introduced, proving architectural innovation can double efficiency [4]

- December 2023: Phi-2 (2.7B parameters) matches 70B-parameter models on reasoning, the first time a tiny model rivals competitors more than 10x larger [2]

- December 2023: TinyGPT-V (2.8B parameters) debuts as the first small vision-language model (can process both images and text) bringing multimodal capabilities to resource-constrained devices [35]

- April 2024: Llama 3 (8B parameters) released, trained on 15.6 trillion tokens, showing that massive data curation works [5]

- April 2024: Phi-3 family launches; Phi-3-mini (3.8B parameters) approaches GPT-3.5-level performance with 46x fewer parameters [3]

These milestones set the stage for today’s leading models, each proving that different approaches to efficiency can succeed.

Key Models in the SLM Landscape

The SLM ecosystem has matured rapidly, with several models emerging as leaders in different domains. Below is a snapshot of the most influential models as of November 2025, representing different approaches to achieving efficiency and performance.

General-Purpose Models

Phi-3 (Microsoft): Uses synthetic pedagogical data. Phi-3-mini (3.8B) approaches GPT-3.5 (175B) performance on general knowledge (68.8% vs 70.0% MMLU) and excels at mathematical reasoning (82.5% GSM8K)—achieving comparable performance with 46x fewer parameters [3]. Exceptional at math and coding, weaker at creative writing.

Mistral 7B (Mistral AI): Architectural efficiency through Grouped-Query Attention and Sliding Window Attention. Outperforms older 13B models on reasoning and comprehension tasks with roughly half the parameters [4]. Optimized for fast deployment.

Llama 3.1 8B (Meta): Trained on 15.6 trillion tokens of curated data with 128K context window—16x larger than Llama 3’s 8K, enabling analysis of full-length documents. Exceeds Phi-3-mini on general knowledge (69.4% vs 68.8% MMLU) and mathematical reasoning (84.5% vs 82.5% GSM8K) while excelling at creative writing and broad knowledge [5]. Widely used as a base for fine-tuning.

Qwen 3 (Alibaba Cloud): A family of models ranging from 0.6B to 235B parameters, trained on 36 trillion tokens across 119 languages. The smaller models (0.6B to 8B) excel at multilingual tasks, and the family supports context windows of up to 128K tokens, enabling analysis of longer documents [34].

SmolLM3 (Hugging Face): A 3B model trained on 11.2 trillion tokens, achieving state-of-the-art performance at the 3B scale. It outperforms Llama 3.2 3B and Qwen2.5 3B while remaining competitive with 4B alternatives. Features dual-mode reasoning (can switch between fast responses and extended thinking), a 128K context window, and multilingual support for 6 languages. Uniquely provides the complete open training recipe, making it ideal for researchers and developers building custom models [36].

Specialized Models: Why Fine-Tuning Matters

General-purpose models can be fine-tuned for specific tasks, often outperforming much larger models:

WizardCoder: A 15B specialized model that outperforms ChatGPT-3.5 on code generation tasks and approaches GPT-4’s coding capabilities [31, 32]. Task-specific training lets a 15B model compete with GPT-4 (estimated at 1.7 trillion parameters [32]) at a fraction of the cost.

MedS (John Snow Labs): An 8B medical model outperforms GPT-4o on clinical summarization, information extraction, and medical question answering [33]. Achieves higher factuality and nearly 2x preference from medical experts compared to GPT-4o. Enables HIPAA-compliant clinical decision support running entirely on-premise—impossible with cloud APIs that transmit patient data externally.

Why SLMs Matter

SLMs matter for three reasons: economics, offline capability, and privacy. Let’s break each down.

The Economics of Ownership vs. Subscription

SLMs offer a fundamentally different cost model compared to cloud LLMs:

- Download an open-source base model like Phi-3 or Llama 3 8B ($0)

- Optionally, fine-tune on proprietary data using a single GPU ($3-5K one-time)

- Deploy on-premise with zero inference cost per query

- Break even in 2-3 months for high-volume applications, versus paying cloud API fees in perpetuity

This shifts your AI from a recurring expense to an owned asset you control and audit.
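The break-even claim above is simple arithmetic, sketched here under stated assumptions. The $4,000 one-time cost is the midpoint of the fine-tuning range quoted above. The monthly API bill and the local running cost (power, maintenance) are hypothetical placeholders, so substitute your own figures.

```python
import math
from typing import Optional


def break_even_months(
    one_time_cost: float,
    monthly_api_cost: float,
    monthly_local_cost: float = 0.0,
) -> Optional[int]:
    """Months until a one-time local investment pays for itself.

    Returns None when running locally never becomes cheaper than the API.
    """
    monthly_savings = monthly_api_cost - monthly_local_cost
    if monthly_savings <= 0:
        return None
    # Round up: the investment is recovered during this month.
    return math.ceil(one_time_cost / monthly_savings)


if __name__ == "__main__":
    # Hypothetical inputs: $4,000 up front, $2,000/month API bill,
    # $200/month to run the local machine.
    print(break_even_months(4000, 2000, 200))
```

With these illustrative inputs the investment is recovered in the third month. At low query volumes the monthly savings shrink and the break-even point moves out accordingly, which is why the economics favor high-volume applications.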

Offline Capability

Cloud LLMs require constant internet connectivity. SLMs run entirely offline.

Consider these scenarios where internet access is unreliable or impossible:

- In-car navigation systems in areas with poor cellular coverage

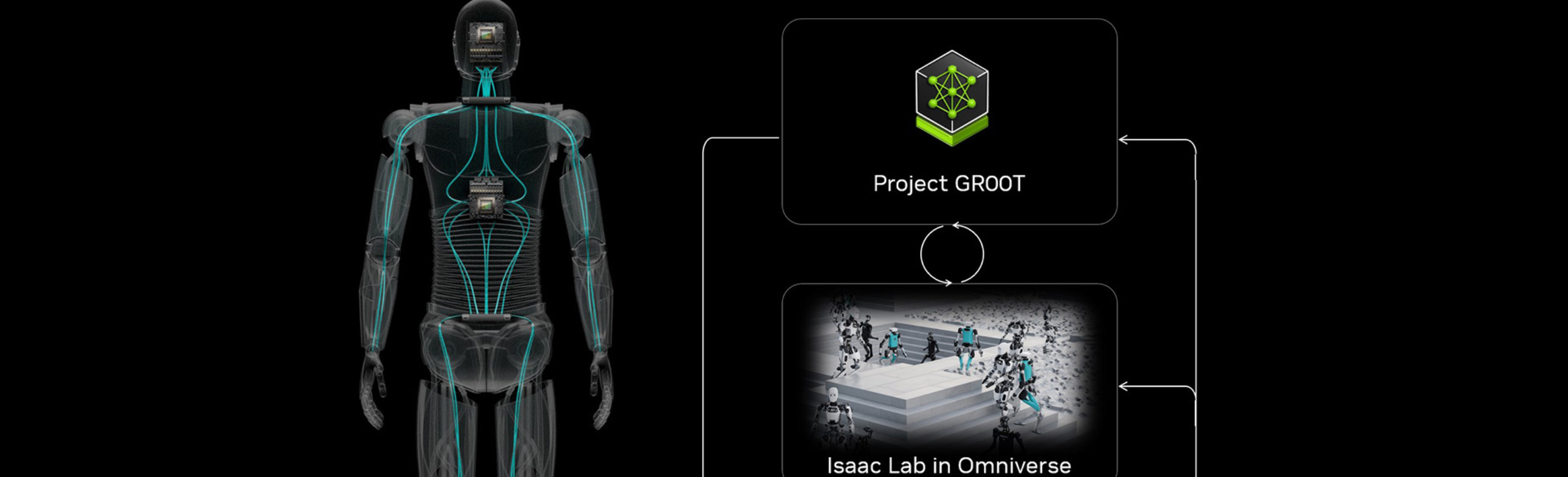

- Factory robots in industrial facilities with restricted network access

- Medical devices in remote clinics without reliable internet

For these use cases, SLMs are the only option. A local Phi-3-mini can run on consumer-grade hardware (MacBook M2, RTX 3060) with zero dependency on external services, generating responses immediately without waiting for network calls.

Privacy

This might be the most important reason. In many industries, sending data to a cloud API is not an option:

- Healthcare: Transmitting patient medical history (PHI) to a public API without a business associate agreement in place violates HIPAA

- Finance: Sending customer financial data to an outside service can violate GLBA, and sharing proprietary trading algorithms exposes trade secrets

- Legal: Sending privileged client communications to an external service can break attorney-client confidentiality

- Europe: Any PII transfer outside the EU faces strict GDPR scrutiny

Privacy and regulatory requirements make local, on-premise SLMs the practical choice for industries like healthcare, finance, legal, and defense.

Key Training Paradigms: Multiple Paths to Efficiency

The efficiency revolution didn’t emerge from a single breakthrough. Instead, different research teams discovered distinct approaches to making smaller models more capable. Understanding these paradigms is useful whether you’re training a model from scratch or fine-tuning an existing one for your specific needs.

Paradigm 1: Synthetic Pedagogical Data (Microsoft Phi)

Philosophy: Quality curriculum beats quantity of data.

Microsoft’s Phi series treats model training like education, using synthetic “textbook-style” data designed to teach concepts progressively rather than massive amounts of raw internet text.

Results: Phi-3-mini approaches GPT-3.5 performance (68.8% vs 70.0% MMLU) with 46x fewer parameters [3].

Trade-off: Exceptional at academic reasoning, math, and code. Weaker at pop culture knowledge and creative writing.

Paradigm 2: Architectural Efficiency (Mistral)

Philosophy: Smarter architecture enables better performance per parameter.

Mistral AI innovated on how the model processes information internally. In traditional attention, every attention head keeps its own keys and values, and every token attends to all the tokens before it. Mistral 7B instead uses Grouped-Query Attention, where groups of query heads share a single set of keys and values, and Sliding Window Attention, where each token attends only to a fixed window of recent tokens rather than the entire context. These changes cut memory use and speed up inference without adding parameters.
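The two mechanisms can be illustrated with a minimal, dependency-free sketch. This is not Mistral’s implementation: the function names are hypothetical, the window here counts the current token plus the tokens just before it (conventions differ), and the configuration in the usage section (32 layers, 32 query heads, 8 key/value heads, head dimension 128, window 4096) is taken from the published Mistral 7B description.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j.

    Causal (j <= i) and limited to the most recent `window` tokens.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def kv_head_for_query_head(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: consecutive query heads share one KV head."""
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   cached_tokens: int, bytes_per_value: int = 2) -> int:
    """Size of the key/value cache; the leading 2 covers keys and values."""
    return 2 * n_layers * n_kv_heads * head_dim * cached_tokens * bytes_per_value


if __name__ == "__main__":
    # Tiny mask so the pattern is visible: each row is one query token.
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))

    full = kv_cache_bytes(32, 32, 128, 4096)    # one KV head per query head
    grouped = kv_cache_bytes(32, 8, 128, 4096)  # 8 shared KV heads
    print(f"KV cache: {full / 2**20:.0f} MiB -> {grouped / 2**20:.0f} MiB")
```

In this sketch, sharing 8 key/value heads across 32 query heads cuts the cache to a quarter of its size, and the sliding window caps how many tokens need to be cached at all. Both savings show up at inference time rather than in the parameter count.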

Results: Mistral 7B outperforms older 13B models across benchmarks despite having roughly half the parameters [4].

Trade-off: Requires deep technical expertise to implement. Benefits appear primarily at deployment, not during development.

Paradigm 3: Scale + Curation (Meta Llama 3)

Philosophy: Massive scale works if you curate intelligently.

Meta’s Llama 3 embraces scale with intelligent filtering, training on 15.6 trillion tokens of carefully curated real-world data rather than synthetic data or architectural redesign.

Results: Llama 3.1 8B approaches GPT-3.5 on general knowledge (69.4% vs 70.0% MMLU) and posts strong mathematical reasoning scores (84.5% GSM8K) [5]. It also excels at creative writing and broad knowledge.

Trade-off: Requires massive computational resources for training. Less specialized than Phi on academic tasks, but more versatile overall.

The right paradigm depends on your use case: pedagogical data for math/reasoning, architectural efficiency for deployment speed, or scale + curation for general-purpose versatility. These approaches matter whether you’re selecting a pre-trained model or deciding how to fine-tune one for your specific application.

Key Takeaways

When to Use SLMs Instead of Cloud LLMs

Consider SLMs when you need offline capability, data privacy, or cost control at scale. If you’re processing confidential documents, operating in low-connectivity environments, or running high-volume inference where API costs compound quickly, local SLMs provide the necessary capabilities while keeping data and costs under your control.

Fine-Tune for Your Specific Task

If you have a well-defined use case and quality domain data, fine-tuning a small model will likely outperform using a general-purpose LLM. WizardCoder (15B) beats ChatGPT-3.5 on code generation. MedS (8B) beats GPT-4o on clinical tasks. The pattern is consistent: download a base model like Llama 3 8B, fine-tune on your proprietary data, and deploy it locally with zero per-query fees. This approach works when your task is specific and repeatable.

Match the Model to Your Use Case

Different models excel at different tasks:

- Math and reasoning tasks: Use Phi-3-mini. Its pedagogical training delivers strong performance on logical and mathematical problems

- Fast deployment and inference: Use Mistral 7B. Its architectural efficiency optimizes for speed

- General-purpose applications: Use Llama 3 8B. Its broad training provides versatility, and it is widely used as a fine-tuning base

- Multilingual applications: Use Qwen 3. It is trained on 119 languages and has strong cross-lingual capabilities

Start with a pre-trained model for general tasks. For specialized applications, fine-tune on your domain data. The key is matching the model’s strengths to your specific requirements.
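As a compact restatement, the recommendations above can be written as a small lookup. This is only an illustrative sketch: the use-case keys and the `recommend_model` helper are hypothetical names, and the mapping encodes nothing beyond the suggestions in this post.

```python
# Illustrative lookup based only on the recommendations in this post.
RECOMMENDED_MODEL = {
    "math_reasoning": "Phi-3-mini",
    "fast_inference": "Mistral 7B",
    "general_purpose": "Llama 3 8B",
    "multilingual": "Qwen 3",
}


def recommend_model(use_case: str, has_domain_data: bool = False) -> str:
    """Pick a starting model; unknown use cases fall back to general purpose."""
    base = RECOMMENDED_MODEL.get(use_case, RECOMMENDED_MODEL["general_purpose"])
    if has_domain_data:
        # Specialized applications: fine-tune the base model on domain data.
        return f"{base} (fine-tuned on your domain data)"
    return base


if __name__ == "__main__":
    print(recommend_model("multilingual"))
    print(recommend_model("math_reasoning", has_domain_data=True))
```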
