
From Words to Actions: The Rise of Vision-Language-Action Models in Robotics

1. Introduction

Only a few years ago, the idea of telling a robot what to do in plain language — and having it understand, perceive its surroundings, and execute the task — felt like science fiction. The journey began with Large Language Models (LLMs), which transformed our ability to process and generate human language. Vision-Language Models (VLMs) soon followed, fusing visual perception with natural language understanding so AI systems could reason jointly about what they see and what they’re told. Yet for robotics, perception and conversation weren’t enough — acting in the real world requires converting sensory and language inputs into precise, coordinated motor commands.

This is where Vision-Language-Action (VLA) models come in. VLAs don’t just describe images or follow instructions — they directly generate robot actions in real time. By combining the reasoning abilities of VLMs with control policies for physical systems, VLAs unify perception, understanding, and execution into a single pipeline. This integration enables general-purpose robots that can handle diverse tasks, adapt to new environments, and operate across different robot platforms without retraining from scratch, paving the way for zero-shot task execution and faster development cycles.

In this blog, we’ll explore the architectures driving state-of-the-art VLA systems, starting with the original Diffusion Policy and moving to large-scale implementations like Pi₀ from Physical Intelligence and GR00T N1 from NVIDIA. We’ll also see how Hugging Face’s SmolVLA brings these capabilities to smaller labs and startups, and how the LeRobot library makes it possible for anyone to train, evaluate, and deploy VLA models. Finally, we will examine Gemini Robotics 1.5, a recent state-of-the-art system from Google DeepMind that pairs an embodied-reasoning model, which plans tasks in the physical world, with a VLA that executes those plans.

By the end, you’ll have a clear picture of where the field stands — and where it’s heading.

2. Diffusion-Based Architectures for VLA in Robotics

The Diffusion Policy framework reframes robot control as a conditional denoising process in the action space. Instead of predicting the next action directly, the model begins with a noisy action sequence and iteratively refines it using a learned gradient field, conditioned on recent visual observations. This has several practical benefits: it naturally models multimodal action distributions (capturing multiple valid ways to solve a task), handles high-dimensional outputs by predicting entire action sequences at once, and trains more stably than energy-based approaches, because learning the gradient of the action distribution removes the need to estimate its normalization constant.
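To make the denoising idea concrete, here is a minimal NumPy sketch of the reverse (sampling) process for one action chunk. It assumes a standard DDPM formulation with a linear β schedule, which is an illustrative choice rather than Diffusion Policy's exact configuration; `eps_model` is a hypothetical stand-in for the trained CNN or transformer noise-prediction network.

```python
import numpy as np


def make_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Linear DDPM noise schedule (an illustrative choice, not necessarily Diffusion Policy's)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def sample_action_chunk(eps_model, obs_embedding, horizon, action_dim, num_steps=50, rng=None):
    """DDPM reverse process: start from Gaussian noise and iteratively denoise an action chunk."""
    rng = np.random.default_rng() if rng is None else rng
    betas, alphas, alpha_bars = make_schedule(num_steps)
    actions = rng.standard_normal((horizon, action_dim))  # pure noise at the last diffusion step
    for k in reversed(range(num_steps)):
        # Predicted noise, conditioned on the (pre-computed) observation embedding.
        eps_hat = eps_model(actions, k, obs_embedding)
        # Mean of the sample at step k-1 given the current sample and the predicted noise.
        mean = (actions - betas[k] / np.sqrt(1.0 - alpha_bars[k]) * eps_hat) / np.sqrt(alphas[k])
        if k > 0:
            actions = mean + np.sqrt(betas[k]) * rng.standard_normal(actions.shape)
        else:
            actions = mean  # no noise is added on the final step
    return actions
```

Because the whole chunk is denoised jointly, different noise seeds can settle into different valid solutions, which is how the multimodality described above shows up in practice.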

In practice, Diffusion Policy is implemented with either CNN-based or transformer-based noise-prediction networks, the latter particularly effective for tasks requiring rapid, fine-grained action changes. It uses receding horizon control, where the model predicts a longer sequence of future actions but only executes the first few before replanning, balancing responsiveness with temporal consistency. Visual conditioning is handled efficiently by encoding the observation once and reusing that embedding across denoising steps, reducing computation and enabling real-time inference.
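The receding-horizon loop and the "encode once, reuse across denoising steps" trick can be sketched as follows. `encode_obs`, `sample_chunk`, and `env_step` are hypothetical placeholders for the vision encoder, the denoising sampler, and the robot/environment interface, and the horizon of 16 with 8 executed actions is an illustrative setting, not a prescribed one.

```python
import numpy as np


def receding_horizon_rollout(encode_obs, sample_chunk, env_step, obs, num_steps,
                             horizon=16, n_exec=8):
    """Predict `horizon` future actions, execute only the first `n_exec`, then replan."""
    executed = []
    while len(executed) < num_steps:
        # Encode the observation once per planning cycle; the sampler reuses this
        # embedding for every denoising step instead of re-running the vision encoder.
        embedding = encode_obs(obs)
        chunk = sample_chunk(embedding, horizon)
        for action in chunk[:n_exec]:
            if len(executed) >= num_steps:
                break
            obs = env_step(action)  # the environment returns the next observation
            executed.append(action)
    return np.array(executed)
```

Executing only part of each chunk keeps the robot responsive to new observations, while predicting a longer chunk keeps consecutive actions temporally consistent.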


Pi₀ from Physical Intelligence builds directly on these principles, replacing discrete diffusion steps with flow matching, a continuous-time formulation that learns a vector field mapping noisy actions toward clean ones. This shift allows for higher control rates (up to 50 Hz), making it particularly effective for dexterous tasks such as folding laundry or assembling objects. As shown in Figure 1, Pi₀ uses a pretrained VLM backbone (PaliGemma) to process multi-camera RGB inputs and natural language instructions, along with proprioceptive robot state. Its action expert module, a transformer trained with flow matching, generates continuous action chunks, which can be adapted to a variety of embodiments thanks to cross-embodiment training over data from single-arm robots, dual-arm manipulators, and mobile platforms. In experiments, Pi₀ has demonstrated both strong zero-shot generalization and rapid adaptation through fine-tuning on high-quality task-specific datasets.
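A minimal sketch of flow matching for action chunks is below. It uses one common convention (a straight-line path from noise at τ = 0 to actions at τ = 1, integrated with a few Euler steps); Pi₀'s paper and code may use a different time direction or step count, and `velocity_model` is a hypothetical stand-in for the action expert.

```python
import numpy as np


def flow_matching_target(actions, noise, tau):
    """Linear path x_tau = (1 - tau) * noise + tau * actions; its velocity is actions - noise."""
    x_tau = (1.0 - tau) * noise + tau * actions
    return x_tau, actions - noise


def flow_matching_loss(velocity_model, actions, cond, rng):
    """Regress the predicted velocity at a random point on the path onto the target velocity."""
    noise = rng.standard_normal(actions.shape)
    tau = rng.uniform()
    x_tau, target = flow_matching_target(actions, noise, tau)
    pred = velocity_model(x_tau, tau, cond)
    return float(np.mean((pred - target) ** 2))


def integrate_actions(velocity_model, cond, shape, num_steps=10, rng=None):
    """Euler integration of the learned vector field from noise (tau=0) to actions (tau=1)."""
    rng = np.random.default_rng() if rng is None else rng
    x = rng.standard_normal(shape)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        x = x + dt * velocity_model(x, i * dt, cond)
    return x
```

Compared with the many small steps of a typical diffusion sampler, a straight-line path lets a handful of integration steps produce a usable chunk, which is part of what makes high control rates practical.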


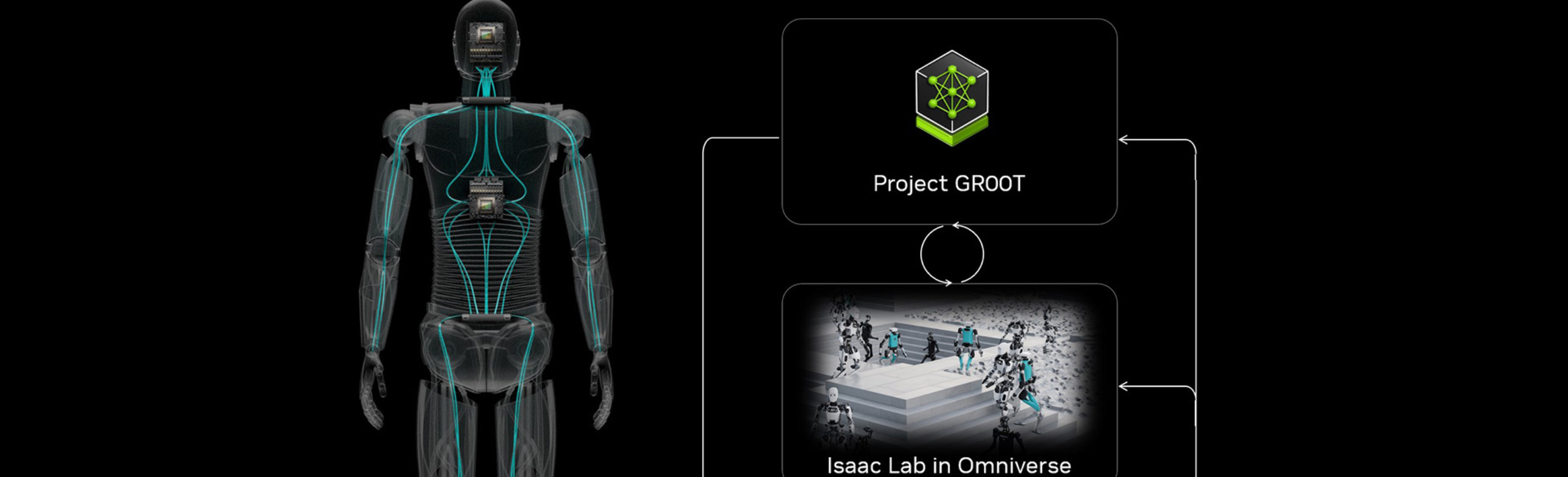

As can be seen in Figure 2, NVIDIA’s GR00T N1 extends these ideas into a dual-system architecture. System 2 is a high-capacity VLM (Eagle-2) that processes vision and language inputs at 10 Hz to form a semantic understanding of the task and environment; this understanding is encoded into a sequence of tokens that is consumed downstream. System 1, the action layer, is a Diffusion Transformer trained with flow matching that generates closed-loop motor commands at 120 Hz. The two modules are trained jointly end-to-end, ensuring tight coupling between high-level reasoning and low-level actuation. GR00T N1’s training leverages a data pyramid:

- Web-scale visual and language data, plus human egocentric videos, provide general priors.

- Synthetic data from physics simulation and generative models expand coverage and variety.

- Real-robot demonstrations ground the model in physical execution.

The result is a single model that can perform language-conditioned manipulation across robot arms, dexterous hands, and full humanoids, achieving state-of-the-art results on simulation benchmarks and promising real-world deployments.
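The timing relationship between the two systems can be illustrated with a single-threaded simulation: a slow VLM refreshes its tokens at 10 Hz while a fast action head runs at 120 Hz on the latest tokens. `vlm_encode`, `action_head`, and `get_obs` are hypothetical placeholders; the real System 1 produces action chunks, and the actual implementation may schedule the two systems differently.

```python
def run_dual_system(vlm_encode, action_head, get_obs, duration_s=1.0, vlm_hz=10, action_hz=120):
    """Timing sketch: a slow VLM refreshes tokens, a fast action head reads the latest ones."""
    ticks = int(duration_s * action_hz)
    refresh_every = action_hz // vlm_hz  # 120 // 10 = 12 fast ticks per slow update
    tokens, vlm_calls, commands = None, 0, []
    for tick in range(ticks):
        obs = get_obs(tick)
        if tick % refresh_every == 0:
            tokens = vlm_encode(obs)  # System 2: semantic tokens from vision and language
            vlm_calls += 1
        # System 1: every tick combines the most recent tokens with the fresh observation/state.
        commands.append(action_head(tokens, obs))
    return commands, vlm_calls
```

Decoupling the rates lets the expensive reasoning model run rarely while the lightweight controller stays closed-loop on fresh proprioceptive state.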

3. SmolVLA and the LeRobot Ecosystem

While Pi₀ and GR00T N1 push the limits of scale and capability, SmolVLA takes a different approach: make VLA technology affordable, efficient, and fully open-source. Developed by Hugging Face, SmolVLA is a compact model (~450M parameters) that runs comfortably on a single consumer GPU or even a CPU, without sacrificing the architectural benefits of larger VLAs.


The model retains the VLM + flow-matching action expert design, but with careful optimizations:

- SmolVLM-2 Backbone – an efficient multi-image VLM using SigLIP for vision encoding and SmolLM2 for language decoding, optimized for fewer tokens per image via pixel shuffle.

- Layer Skipping – features are taken from roughly the halfway point of the VLM and the upper layers are skipped; these intermediate-layer features are often as effective for control, and skipping them roughly halves the VLM’s computational cost.

- Reduced Visual Tokens – only 64 tokens per frame, avoiding high-resolution tiling for faster processing.

- Asynchronous Inference – decouples perception/action prediction from action execution, allowing the robot to maintain a high control rate even when perception is slower.

- Interleaved Attention in the Action Expert – alternates cross-attention (to condition on VLM features) and self-attention (to model temporal dependencies between actions), a design that proved efficient without losing accuracy.
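The asynchronous inference idea from the list above can be sketched with a background thread: the robot keeps executing queued actions, and a new chunk is requested once the queue falls to a threshold. `predict_chunk`, `get_obs`, and `execute` are hypothetical callables, and the merge rule here (replace the queue with the new chunk) is the simplest possible choice; SmolVLA’s own implementation may combine overlapping chunks differently.

```python
from collections import deque
from concurrent.futures import ThreadPoolExecutor


def async_rollout(predict_chunk, get_obs, execute, num_steps, threshold=5):
    """Keep executing queued actions while the next chunk is predicted in a background thread.

    Assumes every predicted chunk is non-empty.
    """
    queue = deque()
    pending = None
    with ThreadPoolExecutor(max_workers=1) as pool:
        for t in range(num_steps):
            # Request a new chunk early, while actions remain in the queue.
            if pending is None and len(queue) <= threshold:
                pending = pool.submit(predict_chunk, get_obs(t))
            # Swap in the new chunk when it is ready; block only if the queue has run dry.
            if pending is not None and (pending.done() or not queue):
                queue = deque(pending.result())
                pending = None
            execute(queue.popleft())
```

The threshold trades off freshness against idle time: a higher value requests predictions earlier, so slow perception is less likely to stall the control loop.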

SmolVLA’s training data is also notable: fewer than 30,000 episodes, all from community-contributed datasets collected on affordable robots. This makes the model’s results highly reproducible for small labs, educators, and hobbyists, in sharp contrast to the thousands of hours of largely proprietary data behind models like Pi₀ and GR00T N1.

Hugging Face’s LeRobot library is the connective tissue that turns SmolVLA from a research artifact into a usable tool. Through LeRobot’s API and GitHub repository, you can:

- Load pretrained models like SmolVLA with a single command.

- Fine-tune them on your own robot’s data, with built-in dataset adapters.

- Evaluate policies in simulation or on hardware without rewriting the core training loop.

- Share trained models publicly, benefiting from a common ecosystem of reproducible experiments.

In short, SmolVLA plus LeRobot represents a low-barrier entry point into VLA research and deployment — the same architectural concepts that power multi-million-dollar research programs, now available to anyone with a modest compute budget.

4. Agentic Robotics: Going One Step Further with Gemini Robotics 1.5

While Pi₀ and GR00T N1 integrate reasoning and action within unified architectures, Gemini Robotics 1.5 from Google DeepMind takes a fundamentally different approach: separating high-level reasoning from low-level control through a dual-model agentic system. This architectural decision addresses a critical challenge in robotics — complex, multi-step tasks require both abstract planning (like understanding recycling rules or packing a suitcase) and precise motor execution, capabilities that are difficult to optimize within a single model.

The system consists of two specialized models that work in tandem:

Gemini Robotics-ER 1.5 functions as the high-level orchestrator. It excels at spatial understanding, task planning, and progress estimation, and it can natively call external tools such as Google Search to gather the information a task requires. For instance, when asked to sort objects into recycling bins according to local guidelines, the orchestrator looks up the relevant rules, interprets the current scene, and turns a general instruction like “sort the trash into the recycle bins” into specific steps such as “pick the red can” and “put the red can in the black bin”. It achieves state-of-the-art performance across 15 embodied reasoning benchmarks and shows strong capabilities in complex pointing, success detection, and multi-view spatial understanding.
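The orchestration pattern (tool call, step decomposition, then dispatch with success detection) can be sketched as a plain loop. Everything here is hypothetical: `search`, `vla_execute`, and `is_done` stand in for a web-search tool, the VLA, and the orchestrator’s success detector, and the rule-based planner is a toy placeholder for what is, in Gemini Robotics-ER 1.5, a learned VLM. This is not Google DeepMind’s API.

```python
from typing import Callable


def plan_steps(instruction: str, scene: list, search: Callable[[str], dict]) -> list:
    """Toy stand-in for the orchestrator: consult a tool, then emit short, VLA-sized steps."""
    bin_for = search(f"recycling rules for: {instruction}")  # tool call, e.g. a web search
    steps = []
    for obj in scene:
        if obj in bin_for:  # objects without a matching rule are left alone
            steps += [f"pick the {obj}", f"put the {obj} in the {bin_for[obj]} bin"]
    return steps


def run_agent(instruction, scene, search, vla_execute, is_done, max_retries=2):
    """Dispatch each step to the VLA and retry when success detection reports failure."""
    log = []
    for step in plan_steps(instruction, scene, search):
        for attempt in range(max_retries + 1):
            vla_execute(step)
            if is_done(step):
                log.append((step, attempt))
                break
        else:
            log.append((step, None))  # gave up; a real orchestrator might replan here
    return log
```

Keeping each step short means the VLA only has to handle a few seconds of motion at a time, while the orchestrator owns the long-horizon bookkeeping.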

Gemini Robotics 1.5, the VLA component, takes these steps and turns them into robot motion. To do so, it introduces a key new capability: embodied thinking. Unlike traditional VLAs that map instructions directly to actions, this Thinking VLA interleaves actions with a multi-level internal reasoning process expressed in natural language, enabling the robot to "think before acting." When the VLA receives an instruction from the orchestrator (such as "pick up the blue sweater"), it generates an internal monologue of primitive motions in natural language (like "move the gripper to the left" or "close the gripper") before executing them. This thinking process helps the model decompose instructions into shorter segments, each corresponding to a few seconds of robot movement, and makes the robot's behavior more interpretable to the user. The result is improved performance on multi-step tasks as well as transparency: users can follow the VLA's motion-level reasoning in real time, which aids trust and debugging.
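The interleaving of thoughts and actions can be sketched as a loop that alternates between producing a short natural-language motion description and the action chunk it conditions. `think` and `act` are hypothetical callables standing in for the model’s two output modes; in the real system both come from a single model, and this sketch only illustrates the control flow and the resulting trace.

```python
def thinking_vla(instruction, think, act, max_segments=10):
    """Interleave short natural-language 'thoughts' with the action chunks they describe."""
    trace = []
    for _ in range(max_segments):
        thought = think(instruction, trace)  # next primitive motion in words, or None when done
        if thought is None:
            break
        chunk = act(instruction, thought)  # a few seconds of motion conditioned on the thought
        trace.append((thought, chunk))
        print(f"[think] {thought}")  # the visible trace is what makes behavior inspectable
    return trace
```

The returned trace is the interpretable artifact: each action chunk is paired with the sentence that motivated it.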

A second major innovation is Motion Transfer (MT), which enables the model to learn across heterogeneous robot embodiments. Through a new architecture and training recipe, Gemini Robotics 1.5 (GR 1.5) can control multiple robots, including the ALOHA bimanual platform, the Bi-arm Franka, and the Apollo humanoid, without robot-specific post-training, and it even demonstrates zero-shot skill transfer between platforms. Tasks trained only on ALOHA data can be executed successfully on the Apollo humanoid and vice versa, substantially reducing the data burden for new robot platforms.

This architectural separation proves its value in long-horizon tasks. In evaluations comparing the Gemini Robotics 1.5 agentic system against baselines that use off-the-shelf VLMs such as Gemini 2.5 Flash as orchestrators, the Gemini Robotics 1.5 Agent achieved nearly double the progress score on complex tasks, with particularly strong gains in task planning. Failure analysis showed that planning errors fell from 25.5% to 9% when Gemini Robotics-ER 1.5 served as the orchestrator, underscoring the importance of specialized embodied reasoning for reliable physical agents.
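To put the failure-analysis numbers in relative terms, the drop in planning errors works out as:

```latex
\frac{25.5\% - 9\%}{25.5\%} = \frac{16.5}{25.5} \approx 0.647
```

In other words, roughly 65% fewer planning errors, with only about a third of the original planning failures remaining.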

Key Differences from Pi₀ and GR00T N1:

- Explicit separation of reasoning and action rather than tight coupling in a single system

- Tool use at the orchestration level, enabling robots to search the web, access APIs, or call custom functions mid-task

- Natural language thinking traces that make the robot's decision-making process interpretable

- Cross-embodiment learning that transfers skills between radically different robot forms without retraining

This dual-model design philosophy reflects a pragmatic insight: general-purpose robotics requires both sophisticated world understanding and robust motor control, but these capabilities may be best developed as specialized components that collaborate rather than compete for representational capacity within a single model.

5. Conclusion

The journey from Diffusion Policy to today's state-of-the-art VLA systems reveals a field rapidly converging on general-purpose robotics. What began as a novel approach to action generation through denoising models has evolved into complete systems capable of perceiving, reasoning, and acting in complex real-world environments.

Each of the models and architectures we covered contributes a key idea:

- Diffusion Policy: Pioneered action generation as iterative denoising, enabling multimodal distributions and temporal consistency through receding horizon control.

- Pi₀: Achieves 50 Hz control with flow matching, demonstrating strong cross-embodiment generalization through a unified VLM backbone and action-expert design.

- GR00T N1: Pushes scale with a dual-system architecture, leveraging a data pyramid that spans web-scale data to real-robot demonstrations.

- SmolVLA: Proves that VLA capabilities are accessible at small scale, running on consumer hardware and trained on fewer than 30K episodes using LeRobot.

- Gemini Robotics 1.5: Separates reasoning from execution, enabling tool use, web search, and interpretable decision-making; achieves zero-shot skill transfer across radically different embodiments through Motion Transfer.

Each system makes different trade-offs between integration, scale, and specialization, but most share common foundations: vision-language backbones, diffusion- or flow-based action generation, and multi-embodiment training. As these approaches mature and converge, we are seeing early steps toward genuinely general-purpose robots: systems that can perceive their environment, reason about complex tasks, and execute dexterous manipulation across diverse platforms. The path forward points to richer cross-embodiment data, better architectures for combining reasoning and control, and open frameworks that make these capabilities accessible to the entire robotics community.
